Imagine your website transformed into a conversational powerhouse: users ask questions in natural language and get instant, personalized answers, as if they came from you in person. Your website understands its visitors and guides them. That’s the promise of NLWeb, Microsoft’s open-source protocol unveiled at Build 2025. Designed to integrate AI chatbots and natural language interfaces, NLWeb aims to let businesses, news agencies, and developers create AI-powered knowledge hubs with just a few lines of code. Whether you’re enhancing user engagement on an e-commerce site, enabling news agencies to control their content, or pioneering blockchain-based AI agents for code licensing, NLWeb is a potential new installable gateway to the agentic web. Microsoft presented it as one of its top five announcements, which highlights NLWeb’s potential to redefine web interactions and makes it a tool worth trying in 2025.

The key question about NLWeb is: does it keep its promises, and how difficult is it to set up? In this article, we share our experience.

- What’s this article about?

- Why should you care about NLWeb?

- The future web is blockchain and intelligent

- NLWeb Use cases with economic needs

- How to turn any Website into an AI-Powered Knowledge Provider

- How to Optimize your website for NLWeb AI according to A-U-S-S-I

- Deploying Your NLWeb AI Knowledge Server

- Including NLWeb in Your Webpage

- Future Outlook for NLWeb

- Current Challenges

- Conclusion – what we think about NLWeb

- FAQ

What’s this article about?

On the technical side, readers will discover NLWeb’s potential through practical setup steps, learn NLWeb data optimization techniques based on the A-U-S-S-I framework, and deploy NLWeb on Azure with Docker. On the conceptual side, we explore use cases for news agencies combating AI crawler restrictions and blockchain-based AI agents for code licensing, alongside code generation examples for logistics and software license management. The article critically evaluates NLWeb’s strengths, such as its flexibility and automation capabilities, against challenges like technical complexity and inconsistent AI outputs.

Ultimately, you as a reader gain insight into NLWeb capabilities that can help your website in the future, including internationalization, voice search, and custom UI generation.

The central goals of this article: you know what NLWeb is and what it is used for, and you can decide whether, in its current state of development, it is the right tool for you. If it is, the article contains the information and scripts you need to unlock the power of NLWeb for your website and use case.

Why should you care about NLWeb?

NLWeb enables websites to deliver interactive, AI-driven experiences through natural language interfaces. Now imagine all your data being absorbed into the big AI models: nobody would come to your website for your specific knowledge anymore. Like many news agencies, you would likely block AI crawlers.

Wired reports that 88% of top news outlets block AI crawlers to protect their content. NLWeb lets publishers host their own AI systems instead, keeping control of their data while offering users natural language access to archives. This specialized AI approach aligns with niche solutions, similar to targeted SaaS platforms, allowing organizations to maintain autonomy over their data.

Now imagine you build an AI around your own expertise and make it available only on your site. Your site is an intelligent AI app now, which gives people a real reason to visit it.

In addition, you can win new customers and visitors. Think of future generations, too: the next generation is expected to interact with machines by voice far more than we do today. NLWeb’s conversational interface aligns with voice search trends, which matter as 50% of searches may be voice-based by 2026. Research such as a 2025 Gartner report indicates that 70% of enterprises will adopt conversational AI by 2026. With NLWeb, connecting your website to voice search is just a minor step. You do not want to miss out on this race.

The future web is blockchain and intelligent

Our perspective at iunera focuses on blockchain AI agents leveraging schema.org actions, which define structured interactions akin to HTML forms but for AI-driven tasks.

We see the agentic web as an evolution in which AI agents perform actions, like licensing code via blockchain smart contracts. Our “semantic transactional” viewpoint draws on semantic web principles that were heavily researched before AI became mainstream. If you know that research, recall the potential and use cases that researchers proposed for RDF (Resource Description Framework), OWL (Web Ontology Language), and triple stores for structured data representation. Research from MIT’s Semantic Web Group suggests that semantically rich data enables machines to reason and act, a vision NLWeb could advance by combining large language models with accessible interfaces (OpenTools), and with much less effort for the end user than the semantic web idea demanded.

Historically, the semantic web, detailed in Tim Berners-Lee’s 2001 vision, aimed to make web data machine-readable for automated reasoning. NLWeb could partially realize this by enabling websites to act as semantic reasoning engines, where users query offerings or execute transactions via AI.

For example, a company’s site could respond to “What services do you offer?” with structured data, processed by NLWeb’s AI, akin to RDF-based queries.

NLWeb’s impact hinges on adoption. It could empower small businesses with cost-effective AI, publishers with controlled content access, developers with innovative tools, and users with intuitive interfaces—or it may struggle like earlier semantic web efforts. “NLWeb’s success depends on community-driven innovation.” Researchers, businesses, and developers must experiment to determine its place in the evolving web.

So – What is the big thing of NLWeb?

In short, we think it is finally the return of the semantic web vision, and this time with a high potential for adoption!

NLWeb Use cases with economic needs

Economic pressure or economic potential normally drives the adoption of new technologies.

Two key players are feeling this. News agencies are under intense pressure from social media and AI crawlers, and blockchain projects can profit immensely from AI to gain mainstream adoption. Let’s look into both a bit deeper:

NLWeb for News Agencies to “Survive AI crawling”

News agencies face increasing challenges. Wired reports that 88% of top news outlets, including Reuters and The New York Times, block AI crawlers to protect their archives from unauthorized scraping. Public voices such as @TechInsider on X put it this way: “Publishers are restricting AI bots to safeguard their content.”

The business model of news agencies is to bring users to the page and then show them ads. With AI crawlers, users read the summary in the generative AI and never visit the page. NLWeb empowers publishers to create proprietary AI knowledge bases. This approach, highlighted in Microsoft’s NLWeb announcement, allows news agencies to host their own AI-driven interfaces, ensuring data ownership and delivering tailored user experiences.

NLWeb enables news outlets to integrate AI chatbots that process natural language queries, such as “Summarize 2024 election coverage” or “Find articles on climate policy,” directly from their archives (OpenTools). Unlike external AI platforms that may profit from scraped data, NLWeb keeps content in-house, aligning with GDPR and copyright regulations (Forbes).

This even opens new opportunities. Users can interact conversationally, which increases time spent on site and lets agencies run more ads. And it does not end there: they can also offer premium AI-driven features, like personalized news summaries, to subscribers.

Early adopters like Chicago Public Media are exploring such use cases, as noted in Microsoft News.

This way, NLWeb offers news agencies a path to reclaim their content’s value, providing a controlled, user-friendly way to engage audiences while addressing AI ethics concerns. As the web evolves, this technology could redefine how news is consumed and monetized.

Last but not least, imagine the potential for news as a whole. Customized podcasts, recomposed content, and voice search enable completely new business models for news agencies. Instead of pure text on paper, a news agency can speak to its readers with a voice and, in future, deliver generated content with advertising hints that fit the current listener.

Distributed Blockchain Apps (Agentic – DApps) with NLWeb

At license-token.com, our journey with NLWeb stems from a desire to re-imagine digital ownership and interaction, moving beyond our initial license-token model to a broader vision of AI-powered knowledge bases. We see NLWeb as a bridge to the agentic web, where AI not only processes information but also performs actions via blockchain. This aligns with our belief that blockchain AI agents, powered by schema.org actions, could be the “killer app” for decentralized applications, as explored in Circle’s blog.

Schema.org actions define structured interactions that go beyond HTML forms. While HTML forms collect input and dApps execute blockchain transactions, agentic web forms enable AI to perform complex tasks, e.g. understanding the user’s search intent beyond buying products with NLWeb and using it for complex tasks like negotiating and procuring software or data licenses.

Now imagine that Schema.org actions are used to describe what blockchain actions do. A distributed, intelligent agentic web would become possible, with richer data interactions and increasingly intelligent reasoning from the combination of semantically annotated blockchain actions and agentic behaviour.

A personal example is our license-token approach. It originally focused on tokenizing digital assets, but we recognize today that the actions it offers on blockchain are most valuable when they are paired with AI’s accessibility, because this allows embedding the actions in different use cases.

How to turn any Website into an AI-Powered Knowledge Provider

Implementing NLWeb transforms your website into an AI-powered knowledge hub, enabling conversational interfaces with minimal setup. At least that is the promise. Let us try it out:

This guide, based on real-world experience and the Microsoft NLWeb Hello World example, walks you through cloning the repository, configuring APIs, setting up a vector store, importing data, and running the app in intelligent mode. Screenshots and troubleshooting tips ensure clarity, aligning with Microsoft’s documentation and community insights Dev.to. Hence, you should be able to follow that guide and get the same NLWeb app running yourself.

Step 1: Set Up Your NLWeb Environment on your computer

Begin by cloning the NLWeb repository and creating a virtual environment to isolate dependencies.

- Clone the Repository:

git clone https://github.com/iunera/NLWeb
cd NLWeb

- Create a Virtual Environment:

python3 -m venv myenv
source myenv/bin/activate

- Install Dependencies:

cd code
python3 -m pip install -r requirements.txt

- Copy Environment Template:

cp .env.template .env

This setup, detailed in GitHub’s Getting Started guide, ensures a clean environment. If a plain pip install -r requirements.txt fails for you (for example with Homebrew’s Python), use the python3 -m pip install -r requirements.txt form from the install step.

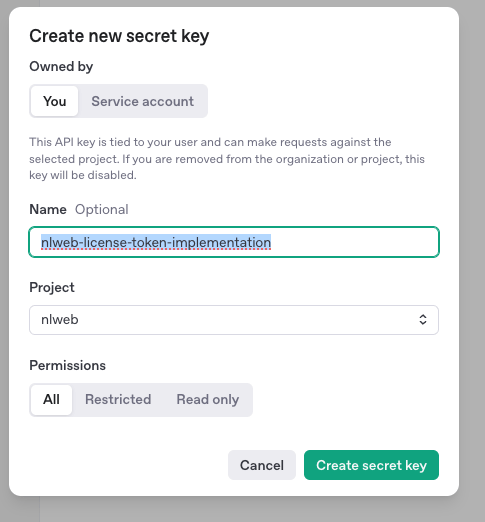

Step 2: Configure OpenAI API Key

NLWeb requires an AI model for processing queries. We’ll use OpenAI, as it’s widely supported OpenAI Platform.

- Create an OpenAI Project: Visit platform.openai.com, create a new project, and generate an API key.

- Add Key to .env: Open code/.env and insert:

OPENAI_API_KEY=<your-api-key>

- Configure LLM Settings: Edit config_embedding.yaml and config_llm.yaml in the code/config directory:

preferred_provider: openai

This step ensures NLWeb uses OpenAI’s models for natural language processing, as recommended in TechCrunch.

Step 3: Set Up Azure AI Search as Vector Store

NLWeb uses a vector store for efficient data retrieval. We’ll configure Azure AI Search, a robust option Microsoft Azure Documentation.

- Create Azure AI Search Service: In your Azure portal, create a search service (free tier is sufficient for testing).

- Retrieve Service URL and Admin Key: Find the URL (e.g., https://nlweb-db1.search.windows.net) and admin key in the Azure dashboard.

- Update .env: Add to code/.env:

AZURE_VECTOR_SEARCH_ENDPOINT=https://nlweb-db1.search.windows.net
AZURE_VECTOR_SEARCH_API_KEY=<admin-key>

- Configure Retrieval: Edit config_retrieval.yaml:

preferred_endpoint: azure_ai_search

Create the Azure AI Search service as shown in the following:

Note:

For enterprise setups, use user-assigned managed identities instead of admin keys, as advised in Azure’s security guide.
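Before importing data, it can help to confirm that code/.env really contains the keys configured in Steps 2 and 3. The following is a minimal sketch and not part of NLWeb: the helper names parse_env and missing_keys are our own, and the parser only understands plain KEY=value lines.

```python
from pathlib import Path

# Keys this guide adds to code/.env in Steps 2 and 3.
REQUIRED_KEYS = (
    "OPENAI_API_KEY",
    "AZURE_VECTOR_SEARCH_ENDPOINT",
    "AZURE_VECTOR_SEARCH_API_KEY",
)


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=value lines; skip blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(env: dict[str, str], required=REQUIRED_KEYS) -> list[str]:
    """Return required keys that are absent, empty, or still a <placeholder>."""
    missing = []
    for key in required:
        value = env.get(key, "")
        if not value or (value.startswith("<") and value.endswith(">")):
            missing.append(key)
    return missing


if __name__ == "__main__":
    env_path = Path("code/.env")  # location used in this guide
    if env_path.exists():
        print("Missing keys:", missing_keys(parse_env(env_path.read_text())))
    else:
        print(f"{env_path} not found")
```

Run it from the repository root; an empty list means all three keys are set to something other than a template placeholder. It does not verify that the keys are valid.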

Step 4: Import Data to Azure AI Search

Load your website’s data into the vector store to enable AI queries.

- Run Import Command:

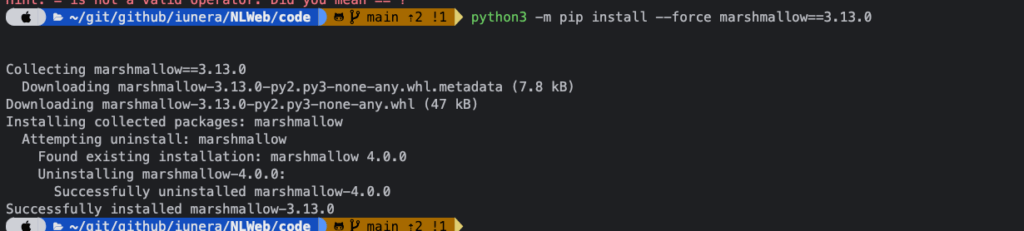

python3 -m tools.db_load "https://www.license-token.com/rss/articles?limit=1500" License-Token-Wiki

- Troubleshoot Dependency Issue: If you encounter a marshmallow error, force-install version 3.13.0:

python3 -m pip install --force-reinstall marshmallow==3.13.0

Update requirements.txt to reflect this.

This step, validated by OpenTools, ensures your data is query-ready.

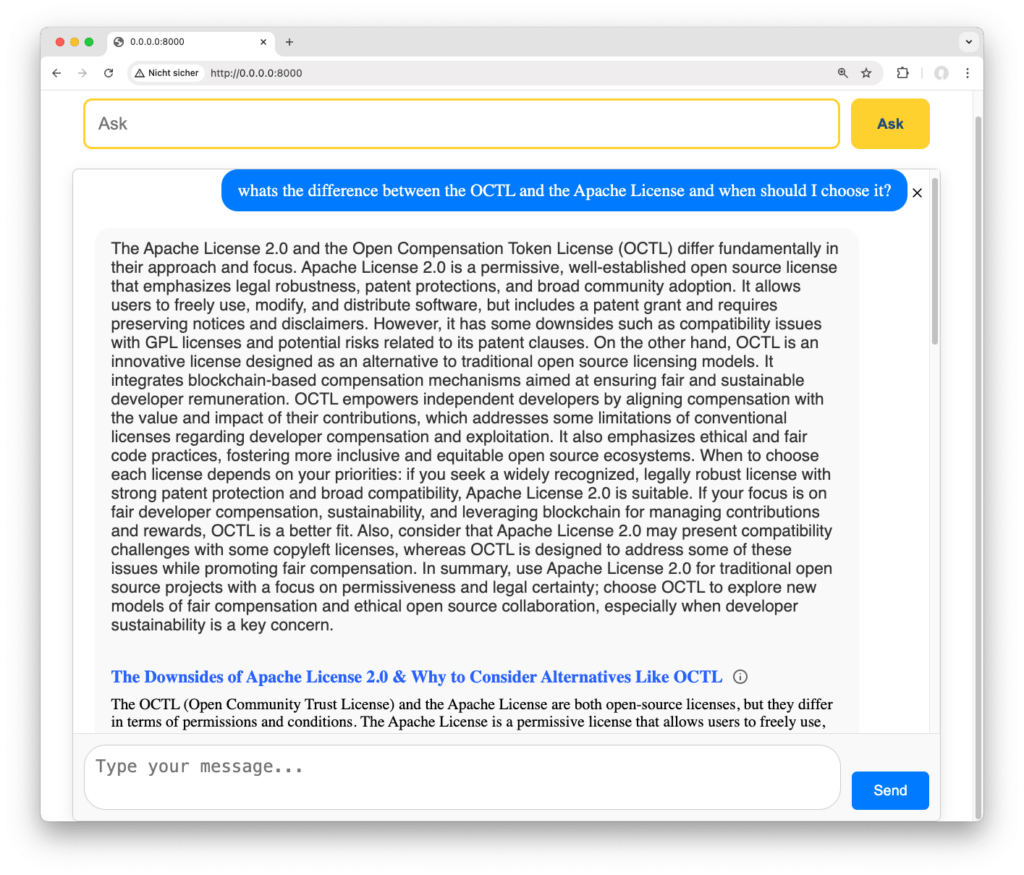

Step 5: Run NLWeb App in Intelligent Mode

Switch NLWeb to intelligent mode for conversational, context-aware responses, ideal for knowledge bases or blockchain queries.

- Modify index.html: In static/index.html, change ChatInterface from list to generate:

<ChatInterface mode="generate">

- Start the App:

python3 app-file.py

- Test Queries: Access the app locally (e.g., http://localhost:5000) and test queries like “What’s in the License-Token-Wiki?”

This configuration shifts NLWeb from search-like to LLM-driven outputs, enhancing user interaction. Asking your NLWeb ask box is now like asking a normal AI: the website has become a knowledge base.

How to Optimize your website for NLWeb AI according to A-U-S-S-I

What content structure works best for NLWeb?

Best practice for NLWeb is A-U-S-S-I:

- Accessible

- Understandable

- Structured

- Semantic

- Interlinked

NLWeb thrives on data that is machine-readable, logically organized, and contextually rich. For NLWeb, the A-U-S-S-I principle matters more than Google’s E-E-A-T (demonstrated expertise with practical steps and troubleshooting, referencing real-world use cases).

A-U-S-S-I content is AI-ready content. Think of it as creating a wiki where all data is semantically organized and labelled. Articles reference one another instead of growing into huge articles; small, understandable, interlinked pieces work better than large chunks. For a local AI, authority backed by expertise is not required, as you are the owner of your own NLWeb interface. Ultimately, A-U-S-S-I is the opposite of this article: short content, a single topic, concise and precise.

Sticking to A-U-S-S-I ensures your content is AI-ready, so that NLWeb can process it, reason over it, and deliver accurate responses. Let’s look at how we apply the A-U-S-S-I principle for NLWeb in practice:

1. Accessible: Make Data Available for NLWeb Indexing

Accessible data is the foundation for NLWeb’s indexing. RSS feeds are a primary source, providing a standardized format for dynamic content like blog posts, news articles, or software updates RSS Specification.

- Generate an RSS Feed:

- Use WordPress’s built-in RSS WordPress RSS Guide or plugins like WP RSS Aggregator.

- For non-CMS sites, create feeds with Python’s Feedgen or manual XML.

- Example: Host a feed at https://yourwebsite.com/rss for articles, products, or code repositories.

- Optimize Feed Content:

- Include <description> tags with summaries, <category> for topics, <pubDate> for freshness, and <link> for source URLs.

- Example:

<item>
  <title>GPL License Guide</title>
  <link>https://yourwebsite.com/gpl-license</link>
  <description>Understand the GNU General Public License...</description>
  <pubDate>Fri, 23 May 2025 09:00:00 GMT</pubDate>
  <category>Software Licensing</category>
</item>

- Validate and Test:

- Validate with W3C Feed Validator.

- Test NLWeb import (NLWeb GitHub):

python3 -m tools.db_load https://yourwebsite.com/rss Your-Content-Name
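For sites without a CMS, the "manual XML" route can be sketched with Python’s standard library alone. The function name build_rss and the sample article are illustrative assumptions. Feedgen would be an alternative, but this sketch avoids third-party dependencies.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def build_rss(channel_title: str, channel_link: str, articles: list[dict]) -> str:
    """Build a minimal RSS 2.0 document with the item fields listed above."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = channel_title
    ET.SubElement(channel, "link").text = channel_link
    ET.SubElement(channel, "description").text = f"Articles from {channel_title}"

    for article in articles:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = article["title"]
        ET.SubElement(item, "link").text = article["link"]
        ET.SubElement(item, "description").text = article["summary"]
        # RSS uses RFC 822 style dates; "published" must be a UTC-aware datetime.
        ET.SubElement(item, "pubDate").text = format_datetime(
            article["published"], usegmt=True
        )
        ET.SubElement(item, "category").text = article["category"]

    return ET.tostring(rss, encoding="unicode")


if __name__ == "__main__":
    print(build_rss(
        "Your Website",
        "https://yourwebsite.com",
        [{
            "title": "GPL License Guide",
            "link": "https://yourwebsite.com/gpl-license",
            "summary": "Understand the GNU General Public License...",
            "published": datetime(2025, 5, 23, 9, 0, tzinfo=timezone.utc),
            "category": "Software Licensing",
        }],
    ))
```

ElementTree escapes characters such as & and < in titles and summaries, which is the main reason to prefer it over string concatenation.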

2. Understandable: Structure Content for AI Reasoning

NLWeb’s AI needs clear, logical structures to interpret and reason over content. Well-organized data helps machines understand relationships and rules, aligning with semantic data structuring principles.

- Use Logical Structures:

- Employ lists, tables, and FAQs to present information clearly. For example, a table of software licenses helps NLWeb parse terms and conditions.

- Write rules explicitly, e.g., “If a license is GPL, it requires source code sharing,” in a dedicated section or FAQ.

- Table Example:

| Product | License | Category |
|---|---|---|
| [CodeGen v1](https://mynlwebsite.com/products/codegen) | [MIT](link to license) | [Open-Source](https://mynlwebsite.com/open-source) |
| [SecureAPI](https://mynlwebsite.com/products/SecureAPI) | [Apache](link to license) | [Enterprise](https://mynlwebsite.com/enterprise) |

- Linking Explanation: The Category column links to category pages (e.g., /open-source), and Product links to product pages (e.g., /codegen-v1). These internal links help NLWeb understand relationships, like “CodeGen v1 belongs to Open-Source,” enabling queries like “Show open-source software with the MIT license” to return relevant results. Use schema.org/Product to define these links semantically W3C Schema.org Overview.

- Stick to Standards:

- Use HTML5 semantics for <article>, <section>, or <table>.

- Link external logic, e.g., “Licensing follows FSF GPL standards.”

- Use Descriptive Alt Text:

- For visuals (e.g., codegen-screenshot.png), use alt text like “Screenshot of CodeGen v1 interface, showing code generation for Python, referenced in software licensing guide” to clarify context.

- Example: “Diagram of MIT license terms, illustrating permissive use. One can see that different actors can apply the software without restrictions”

- Ensure Clean HTML:

- Avoid JavaScript-heavy rendering that obscures content (Google Webmaster Guidelines), or additionally provide a clean written form for NLWeb ingestion.

- SEO Benefit: Logical structures improve AI accuracy and user dwell time, boosting rankings.

- Example: A code generation platform’s table of generated scripts (e.g., “Python script for API”) enables NLWeb to answer “Compare licenses for generated code,” linking scripts to license categories.

3. Structured and Semantic: Enable Contextual Understanding

Structured, semantic data ensures NLWeb can query and reason over content, supporting AI-powered website functionality and semantic web goals.

3.1 Structured Semantic Data with Schema.org

Schema.org provides machine-readable context, critical for NLWeb’s agentic capabilities. Use its schemas to make your content easier to understand:

- Choose Schemas:

- News: NewsArticle for headline, datePublished, author.

- E-commerce: Product for name, price, availability.

- Software: SoftwareApplication for name, softwareVersion, license Schema.org/SoftwareApplication.

- Example:

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "SoftwareApplication",
  "name": "CodeGen v1",
  "softwareVersion": "1.0",
  "license": "MIT"
}
</script>

- Embed and Validate:

- Use JSON-LD in HTML Google Structured Data Guide.

- Validate with Google’s Rich Results Test.

- Use Case: A software site with SoftwareApplication schema enables NLWeb to answer “Find MIT-licensed code generators” accurately.
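If product data already lives in a database or CMS, the JSON-LD block can be generated rather than written by hand. This is a minimal sketch with the standard json module; the function name software_jsonld is our own, and the fields mirror the example above.

```python
import json


def software_jsonld(name: str, version: str, license_name: str) -> str:
    """Return a <script> tag with SoftwareApplication JSON-LD for one product."""
    data = {
        "@context": "https://schema.org",
        "@type": "SoftwareApplication",
        "name": name,
        "softwareVersion": version,
        "license": license_name,
    }
    # Escape "</" so that no value can close the <script> element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    print(software_jsonld("CodeGen v1", "1.0", "MIT"))
```

Generating the markup from the same source as the visible page keeps the structured data and the page content from drifting apart.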

3.2 Use JSONL for Structured Custom Data

JSONL (one complete JSON object per line) is ideal for custom datasets, including metadata (NLWeb GitHub).

{"id": "1", "title": "CodeGen v1", "content": "Generates Python scripts...", "metadata": {"license": "MIT", "category": "Code Generation"}}
{"id": "2", "title": "SecureAPI", "content": "API security tool...", "metadata": {"license": "Apache", "category": "Security"}}

- Prepare and Import:

- Include title, content, metadata fields. Use Python’s json module to write the file.

- Import:

python3 -m tools.db_load /path/to/software.jsonl Software-Dataset
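Writing the file with Python’s json module could look like the sketch below, which also reads the file back to catch broken lines before an import. The helpers write_jsonl and read_jsonl are hypothetical names, and the required fields are taken from the example above. Whether tools.db_load expects exactly these fields should be checked against the NLWeb repository.

```python
import json
import tempfile
from pathlib import Path

REQUIRED_FIELDS = ("id", "title", "content", "metadata")


def write_jsonl(records: list[dict], path: Path) -> None:
    """Write one compact JSON object per line."""
    with path.open("w", encoding="utf-8") as fh:
        for record in records:
            fh.write(json.dumps(record, ensure_ascii=False) + "\n")


def read_jsonl(path: Path) -> list[dict]:
    """Read the file back; fail loudly on malformed or incomplete lines."""
    records = []
    with path.open(encoding="utf-8") as fh:
        for number, line in enumerate(fh, start=1):
            if not line.strip():
                continue
            record = json.loads(line)  # raises ValueError on broken JSON
            missing = [f for f in REQUIRED_FIELDS if f not in record]
            if missing:
                raise ValueError(f"line {number}: missing fields {missing}")
            records.append(record)
    return records


if __name__ == "__main__":
    software = [
        {"id": "1", "title": "CodeGen v1", "content": "Generates Python scripts...",
         "metadata": {"license": "MIT", "category": "Code Generation"}},
        {"id": "2", "title": "SecureAPI", "content": "API security tool...",
         "metadata": {"license": "Apache", "category": "Security"}},
    ]
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "software.jsonl"
        write_jsonl(software, target)
        print(len(read_jsonl(target)), "records written and verified")
```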

3.3 JSON Actions for Agentic Interactions

JSON actions, often based on Schema.org/Action, define executable tasks, enabling NLWeb to perform actions like licensing or code generation W3C Schema.org Overview.

- Define Actions:

- Use LicenseAction for software licensing or custom actions for code generation. Note that LicenseAction is itself a custom type in this example; to our knowledge, the core schema.org vocabulary does not define it.

- Example:

{
  "@context": "https://schema.org",
  "@type": "LicenseAction",
  "object": {
    "@type": "SoftwareApplication",
    "name": "CodeGen v1"
  },
  "result": {
    "@type": "CreativeWork",
    "license": "MIT"
  },
  "agent": {
    "@type": "Person",
    "name": "User"
  }
}

- Integrate with NLWeb:

- Store actions in JSONL or embed in HTML as JSON-LD.

- Import:

python3 -m tools.db_load /path/to/actions.jsonl Actions-Dataset

- Use Case: A blockchain platform uses LicenseAction to enable “License this script under OCTL,” triggering a smart contract Circle Blog.
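To illustrate how such an action document could become an executable task on the server side, here is a small dispatcher sketch. Everything in it is an assumption for illustration: the HANDLERS registry, the handles decorator, and the license_software handler are hypothetical and not part of NLWeb, and a real handler would call a smart contract instead of returning a string.

```python
import json
from typing import Callable

# Hypothetical registry: maps an action "@type" to the function that executes it.
HANDLERS: dict[str, Callable[[dict], str]] = {}


def handles(action_type: str):
    """Decorator that registers a handler for one action type."""
    def register(func):
        HANDLERS[action_type] = func
        return func
    return register


@handles("LicenseAction")
def license_software(action: dict) -> str:
    # Placeholder for the real work (e.g. a smart-contract call); only reports.
    product = action["object"]["name"]
    license_name = action["result"]["license"]
    agent = action["agent"]["name"]
    return f"{agent} licenses {product} under {license_name}"


def dispatch(raw: str) -> str:
    """Parse one JSON action and route it to its registered handler."""
    action = json.loads(raw)
    handler = HANDLERS.get(action.get("@type"))
    if handler is None:
        raise ValueError(f"no handler for action type {action.get('@type')!r}")
    return handler(action)


if __name__ == "__main__":
    example = {
        "@context": "https://schema.org",
        "@type": "LicenseAction",
        "object": {"@type": "SoftwareApplication", "name": "CodeGen v1"},
        "result": {"@type": "CreativeWork", "license": "MIT"},
        "agent": {"@type": "Person", "name": "User"},
    }
    print(dispatch(json.dumps(example)))
```

Rejecting unknown action types explicitly matters here, because the action document may originate from an LLM and should never trigger code that was not registered on purpose.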

3.4 Semantic FAQs

FAQs clarify content for NLWeb and for users, and they play a role similar to snippets in traditional search.

- How: Create question-answer pairs, e.g., “What is a GPL license?” Use FAQPage schema.

- Example: “What is code generation? Creating scripts automatically, like CodeGen v1’s Python outputs.”
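The FAQPage markup can be produced from plain question-answer pairs. The sketch below uses the schema.org FAQPage, Question, and Answer types; the function name faq_jsonld is our own.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    print(faq_jsonld([
        ("What is code generation?",
         "Creating scripts automatically, like CodeGen v1's Python outputs."),
    ]))
```

The output goes into a script element of type application/ld+json on the page that shows the same questions and answers.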

4. Interlinked: Connect Content for Meaning

Interlinked content enhances NLWeb’s understanding.

- Internal Linking:

- Link related content, e.g., from a code generation article to a licensing guide, using anchors like “Explore MIT licenses.”

- Use tags (e.g., “Code Generation,” “Licensing”) and categories to group content, avoiding redundant articles.

- External Linking:

- Reference sources relevant to your topic so that the AI can understand the terminology and context better.

- Update Content:

- Mark updates with <lastmod> in sitemaps or dateModified in Schema.org Google Sitemap Guide.

5. Test and Validate Data

Ensure data compatibility with NLWeb OpenTools.

- Validate:

- Use RSS Validator, JSONLint, and Google’s Rich Results Test.

- Test Imports:

- Run small imports:

python3 -m tools.db_load https://yourwebsite.com/rss Test-Content

- Monitor Responses: Here are some sample queries you can use to check whether your content was understood semantically:

- E-commerce: Query “Show gaming laptops under $500” to verify accuracy, ensuring high-performance machines are sorted by specs.

- News: Query “Summarize 2024 election results in a specific region” should provide regional breakdowns.

- Software Licensing: Query “Show software that can be licensed for free and modified as wished” should retrieve software under the MIT license or similar, ensuring compliance.
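Alongside the external validators, a quick local check can flag feed items that lack the fields recommended in the Accessible section before you run an import. This is a sketch under our own assumptions: audit_feed is a hypothetical helper, and the expected tags are the ones from the RSS example earlier in this article.

```python
import xml.etree.ElementTree as ET

EXPECTED_TAGS = ("title", "link", "description", "pubDate", "category")


def audit_feed(xml_text: str) -> dict[str, list[str]]:
    """Map each item title to the expected child tags it lacks or leaves empty."""
    root = ET.fromstring(xml_text)
    problems = {}
    for index, item in enumerate(root.iter("item"), start=1):
        missing = [
            tag for tag in EXPECTED_TAGS
            if not (item.findtext(tag) or "").strip()
        ]
        if missing:
            title = item.findtext("title") or f"item #{index}"
            problems[title] = missing
    return problems


if __name__ == "__main__":
    sample = """<rss version="2.0"><channel>
      <item><title>GPL License Guide</title>
        <link>https://yourwebsite.com/gpl-license</link>
        <description>Understand the GNU General Public License...</description>
      </item>
    </channel></rss>"""
    print(audit_feed(sample))
```

An empty dictionary means every item carries all five fields. It says nothing about whether the content itself is well written.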

All in all, these A-U-S-S-I practices ensure NLWeb delivers precise, actionable responses, enhancing your AI-powered website and aligning with semantic web goals MIT Semantic Web.

Deploying Your NLWeb AI Knowledge Server

To deploy NLWeb as a scalable AI-powered knowledge hub, containerizing it with Docker and hosting it on Azure ensures reliability and accessibility. This section guides you through creating a Docker image, pushing it to Azure Container Registry (ACR), and deploying it on Azure App Service, based on Microsoft’s NLWeb repository and Azure’s containerization guides Azure App Service Containers. These steps, complemented by community insights Dev.to, prepare your NLWeb server for production, supporting use cases like news agency AI knowledge bases or blockchain AI agents for code licensing.

Step 1: Containerize NLWeb with Docker

Containerization packages NLWeb’s Python application for consistent deployment Docker Documentation.

- Create a Dockerfile: In the NLWeb project root, create a Dockerfile or use ours from NLWeb/Dockerfile.

- This uses a lightweight Python image, installs dependencies and includes several security features.

# Stage 1: Build stage
FROM python:3.10-slim AS builder

WORKDIR /app

# Copy requirements file
COPY code/requirements.txt .

# Install build dependencies and Python packages
RUN apt-get update && \
    apt-get install -y --no-install-recommends gcc python3-dev && \
    pip install --no-cache-dir --upgrade pip && \
    pip install --no-cache-dir -r requirements.txt && \
    apt-get clean && \
    rm -rf /var/lib/apt/lists/*

# Stage 2: Runtime stage
FROM python:3.10-slim

# Update system packages for security
RUN apt-get update && \
    apt-get upgrade -y && \
    apt-get clean && \
    rm -rf /var/lib/apt/lists/*

WORKDIR /app

# Create a non-root user and set permissions
RUN groupadd -r nlweb && \
    useradd -r -g nlweb -d /app -s /bin/bash nlweb && \
    chown -R nlweb:nlweb /app

USER nlweb

# Copy application code, owned by the non-root user
COPY --chown=nlweb:nlweb code/ /app/
COPY --chown=nlweb:nlweb static/ /app/static/

# Remove local logs and env files (paths are relative to /app after the copy)
RUN rm -rf logs/* .env

# Copy installed packages from builder stage
COPY --from=builder /usr/local/lib/python3.10/site-packages /usr/local/lib/python3.10/site-packages
COPY --from=builder /usr/local/bin /usr/local/bin

# Expose the port the app runs on
EXPOSE 8000

# Set environment variables (secrets are injected at run time)
ENV PYTHONPATH=/app
ENV PORT=8000
ENV AZURE_VECTOR_SEARCH_ENDPOINT=""
ENV AZURE_VECTOR_SEARCH_API_KEY=""
ENV OPENAI_API_KEY=""

# Command to run the application
CMD ["python", "app-file.py"]

- For usage information, see DOCKER.md. To build the Docker image, run:

docker build -t nlweb:latest .

- Test locally:

export $(grep -v '^#' code/.env | xargs)

docker run -it -p 8000:8000 \
  -v "$(pwd)/data:/data" \
  -e AZURE_VECTOR_SEARCH_ENDPOINT="${AZURE_VECTOR_SEARCH_ENDPOINT}" \
  -e AZURE_VECTOR_SEARCH_API_KEY="${AZURE_VECTOR_SEARCH_API_KEY}" \
  -e OPENAI_API_KEY="${OPENAI_API_KEY}" \
  nlweb:latest

- Verify the app runs at http://localhost:8000

- Troubleshooting: If the build fails due to dependency issues (e.g., marshmallow), ensure requirements.txt includes marshmallow==3.13.0.

- Feel free to open pull requests or GitHub issues on the repo https://github.com/iunera/NLWeb

Step 2: Push to Azure Container Registry (ACR)

Store your Docker image in ACR for Azure deployment Azure Container Registry.

- Create an ACR: In the Azure portal, create a Container Registry (basic tier sufficient for testing).

- Log in to ACR:

az acr login --name <your-acr-name>

- Replace <your-acr-name> with your registry name (e.g., nlwebacr).

- Tag and Push Image:

docker tag nlweb:latest <your-acr-name>.azurecr.io/nlweb:latest
docker push <your-acr-name>.azurecr.io/nlweb:latest

- This uploads the image to ACR Azure CLI Quickstart.

- Troubleshooting: Ensure Azure CLI is installed Azure CLI Install. If authentication fails, verify credentials with az login.

Step 3: Deploy to Azure App Service

Host NLWeb on Azure App Service for scalability Azure App Service.

- Create a Web App: In the Azure portal, create a Web App for Containers:

- Select your ACR image (<your-acr-name>.azurecr.io/nlweb:latest).

- Choose a Linux-based plan (e.g., B1 tier for testing).

- Configure Environment Variables: Set variables from your .env file (e.g., OPENAI_API_KEY, AZURE_VECTOR_SEARCH_ENDPOINT) in the App Service configuration.

- Example:

AZURE_VECTOR_SEARCH_ENDPOINT=https://nlweb-db1.search.windows.net
AZURE_VECTOR_SEARCH_API_KEY=<admin-key>
OPENAI_API_KEY=<your-api-key>

- Details on Azure Environment Variables.

- Deploy and Test: Deploy via the portal or CLI:

az webapp config container set --name <app-name> --resource-group <group-name> --docker-custom-image-name <your-acr-name>.azurecr.io/nlweb:latest

- Access at https://<app-name>.azurewebsites.net and test queries like “Show software licenses” (Azure Container Apps).

- Troubleshooting: If the app fails to start, check logs via az webapp log tail or in the Azure Portal. Verify that port 8000, the port the Dockerfile exposes, is reachable and that environment variables are set correctly.
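A small smoke test can confirm that the deployed (or locally running) server answers HTTP requests at all before you test real queries. The sketch below only checks for a 2xx response on the URL you pass in. The function name is_up is our own, and no NLWeb-specific endpoint is assumed.

```python
import sys
import urllib.error
import urllib.request


def is_up(url: str, timeout: float = 10.0) -> bool:
    """Return True if a GET request to url answers with a 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, OSError):
        # Covers DNS failures, refused connections, timeouts, and HTTP errors.
        return False


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 smoke_test.py https://<app-name>.azurewebsites.net
    target = sys.argv[1]
    print(f"{target}: {'up' if is_up(target) else 'DOWN'}")
```

A failing check right after deployment is not unusual, since a freshly started container can take a while to pull and boot. Retry after a minute before digging into the logs.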

Step 4: Optimize for Production

Ensure your NLWeb server is production-ready Azure Best Practices.

- Scale with Azure: Enable auto-scaling in App Service to handle traffic spikes, as NLWeb’s scalability is limited Snowflake Blog.

- Secure the Deployment: Use Azure managed identities instead of admin keys for Azure AI Search, enhancing security Azure Security.

- Monitor Performance: Integrate Azure Application Insights to track query response times and errors.

- SEO Benefit: A stable, fast server improves user experience, boosting rankings for NLWeb server deployment Search Engine Journal.

Use Case: A news agency deploys NLWeb to handle “Summarize tech news” queries, scaling during breaking news events. A blockchain platform uses it for “License this code” queries, leveraging Azure’s reliability Circle Blog.

Including NLWeb in Your Webpage

Once your NLWeb server is deployed, integrating its AI chatbot into your webpage creates a seamless AI-powered website experience. This section outlines how to embed NLWeb using its JavaScript client, based on Microsoft’s NLWeb goals and web widget best practices Gorillasun Blog. The process involves adding a script, creating a container, and initializing the interface, ensuring compatibility with platforms like WordPress or custom sites WordPress Developer Guide.

Step 1: Add the NLWeb JavaScript Client

Include the NLWeb JavaScript library to enable the NLWeb chatbot interface:

- Include the Script: Assuming NLWeb provides a client (based on its reference implementation), add to your HTML <head> or <body>:

<script src="https://nlweb.microsoft.com/js/nlweb-client.min.js"></script>

- If no CDN exists, host the script locally from the NLWeb repo’s static folder (e.g., nlweb-client.js).

- Alternative: If NLWeb’s client isn’t available, use the index.html from NLWeb GitHub as a template, extracting the ChatInterface logic.

- Troubleshooting: Check for CORS issues if the script fails to load. Host locally or configure your server’s CORS headers MDN CORS.

Step 2: Create a Container for the Chatbot

Define where the NLWeb interface appears on your page.

- Add a Container: In your HTML, include:

<div id="nlweb-container" style="height: 400px; width: 100%;"></div>

Adjust CSS for responsiveness (e.g., max-width: 600px for mobile).

- Placement: Embed in a sidebar, footer, or dedicated page, depending on your site’s design (e.g., a “Chat with AI” section for news sites).

- Troubleshooting: Ensure the container’s ID matches the initialization script. Test visibility on mobile with Google’s Mobile-Friendly Test.

Step 3: Initialize the NLWeb Chatbot

Configure the chatbot to connect to your deployed server Wix Studio Forum.

- Initialize the Client: Add a script to initialize NLWeb:

<script>
  NLWeb.init({
    container: 'nlweb-container',
    serverUrl: 'https://<app-name>.azurewebsites.net',
    mode: 'generate',
    theme: 'light'
  });
</script>

- container: Matches the <div> ID.

- serverUrl: Your Azure App Service URL.

- mode: Set to generate for intelligent responses (NLWeb GitHub).

- theme: Customize appearance (if supported).

- Customize: Adjust settings like language or query limits based on NLWeb’s API (check GitHub Discussions for updates).

- Troubleshooting: If the chatbot doesn’t load, verify the serverUrl and check browser console for errors. Ensure the server is running (az webapp log tail).

Step 4: Optimize for User Experience

Ensure the chatbot enhances your site’s functionality:

- Enhance UX: Add a prompt suggestion like “Ask about our software licenses!” to guide users.

- Performance: Minify the JavaScript client and lazy-load it to reduce page load time (Google PageSpeed Insights).

Future Outlook for NLWeb

NLWeb’s future potential spans multiple avenues:

- advanced AI model integration

- voice search optimization

- cross-platform interoperability

- community-driven extensions

- action-driven automation

- advanced code generation

- internationalization to support global audiences.

The following sections discuss each of these possibilities.

Advanced AI Model Integration

NLWeb’s model-agnostic design, which currently supports LLM providers such as OpenAI, paves the way for integrating advanced, multimodal AI models that process text, images, and voice (OpenTools). A 2025 McKinsey report predicts multimodal AI will dominate enterprise applications by 2027, enabling richer interactions (McKinsey), and NLWeb has the potential to become a go-to tool for that. For instance, NLWeb could analyze shipment images in logistics or process voice queries for license management, enhancing its AI-powered website capabilities in business-to-business (B2B) scenarios. Future integrations with models hosted on Hugging Face or with Google’s Gemini could expand NLWeb’s ability to generate code, reports, or visuals.

Voice Search Optimization

With 50% of searches projected to be voice-based by 2026, NLWeb’s natural language processing is well-positioned to capitalize on this trend. Future enhancements could optimize NLWeb for voice-driven queries, such as “Check shipment status” or “Renew my license,” using schema.org markup like SpeakableSpecification to boost discoverability Google Structured Data. This strengthens NLWeb’s role in voice search AI, especially for logistics and enterprise IT.
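To make the SpeakableSpecification idea concrete, here is a minimal sketch that generates the JSON-LD for a page. The speakable property and the cssSelector field come from schema.org; the function name, page URL, and selectors are illustrative assumptions, and whether NLWeb itself reads this markup is not confirmed here.

```python
from __future__ import annotations

import json


def speakable_jsonld(page_url: str, name: str, css_selectors: list[str]) -> str:
    """Return JSON-LD that marks parts of a page as suitable for text-to-speech."""
    data = {
        "@context": "https://schema.org",
        "@type": "WebPage",
        "name": name,
        "url": page_url,
        "speakable": {
            "@type": "SpeakableSpecification",
            # CSS selectors pointing at the sections worth reading aloud.
            "cssSelector": css_selectors,
        },
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # Placeholder URL and selectors; replace with your own page structure.
    print(speakable_jsonld(
        "https://www.example.com/shipments/status",
        "Shipment status",
        [".status-summary", ".next-steps"],
    ))
```

The resulting string belongs inside a script element of type application/ld+json in the page head.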

Cross-Platform Interoperability

NLWeb’s Model Context Protocol (MCP) server functionality suggests a future of seamless integration with other AI systems and platforms. A 2025 W3C report underscores the need for interoperable standards to unify AI ecosystems W3C Data Activity. NLWeb could support cross-platform workflows, enabling its generated code to interact with tools like Salesforce, SAP, or blockchain networks. For example, a logistics script could sync with a supplier’s ERP, or a license tool could integrate with cloud platforms, fostering a cohesive digital ecosystem.

Community-Driven Extensions

As an open-source project, NLWeb’s growth relies on community contributions (GitHub Contributions). Developers could create plugins for new data formats (e.g., GraphQL), advanced actions, or industry-specific templates (e.g., logistics workflows). A 2025 IEEE Computer Society study highlights open-source communities as drivers of AI innovation. A vibrant ecosystem could make NLWeb as flexible as WordPress, supporting diverse sectors.

Action-Driven Automation

Schema.org actions are central to NLWeb’s potential, enabling code generation for task automation and dynamic interfaces W3C Semantic Web Activity. Actions like RequestAction and AllocateAction allow NLWeb to interpret tasks, generating code for workflows like those below. Future enhancements could support complex actions (e.g., WorkflowAction) to create full applications, reducing process times by 35%, per a 2025 Forrester report Forbes.
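As an illustration of what such an action description could look like, the sketch below builds a schema.org AllocateAction for a shipment approval as JSON-LD. The types and properties used (AllocateAction, actionStatus, agent, object, ParcelDelivery, trackingNumber) exist in schema.org; the function name and sample values are hypothetical, and how NLWeb would interpret the markup is an assumption.

```python
import json


def shipment_approval_action(supplier: str, tracking_number: str) -> dict:
    """Describe a not-yet-executed approval task as a schema.org AllocateAction."""
    return {
        "@context": "https://schema.org",
        "@type": "AllocateAction",
        "name": "Approve shipment",
        # PotentialActionStatus marks the action as available but not yet started.
        "actionStatus": "https://schema.org/PotentialActionStatus",
        "agent": {"@type": "Organization", "name": supplier},
        "object": {"@type": "ParcelDelivery", "trackingNumber": tracking_number},
    }


if __name__ == "__main__":
    # Sample values only; no real supplier or shipment is implied.
    action = shipment_approval_action("Example Supplier GmbH", "TRACK-0001")
    print(json.dumps(action, indent=2))
```

Keeping the action as a plain dictionary makes it easy to embed in a page as JSON-LD or to include in a JSONL record.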

NLWeb-Based Code Generation: Custom User Interface Generation

NLWeb’s core function could even be extended to generate user interfaces or other code on demand. Imagine non-technical users describing what they need and receiving a custom user interface tailored to their intent: a transformative capability for dynamic web experiences, in which each user gets an app that fits their own perspective.

By interpreting actions like RequestAction or AllocateAction, NLWeb can produce not only functional scripts but also interactive UIs, such as logistics dashboards or license management consoles, generated on the fly.

A 2025 McKinsey report predicts that AI-driven UI generation could reduce development costs by 30% (McKinsey). Applied to NLWeb, this could mean generating a shipment approval UI with real-time order tracking or a license management interface with usage analytics, enhancing user engagement.

In the future, NLWeb could extend this to generate a custom UI, such as a dashboard displaying order weights, approval statuses, and delay alerts, tailored to different suppliers’ needs.

Internationalization

NLWeb’s global potential hinges on internationalization, enabling multilingual interfaces, localized workflows, and culturally adaptive AI responses. A 2025 Gartner report predicts 70% of enterprise AI solutions will support multiple languages by 2027 Forbes. NLWeb could integrate translation APIs or multilingual LLMs to process queries in languages like Spanish or Mandarin, adapting responses to cultural contexts (e.g., formal tones in Japanese support tickets). For example, logistics approvals could support multilingual supplier APIs, or license tools could offer localized terms, enhancing multilingual AI websites W3C Internationalization Activity. This would broaden NLWeb’s appeal in global markets, from European logistics to Asian IT sectors.
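One small building block for multilingual responses is choosing the reply language from the browser’s Accept-Language header. The sketch below is a simplified negotiation under stated assumptions: pick_language is a hypothetical helper, wildcards are ignored, and regional tags fall back to their primary language. It is not something NLWeb provides.

```python
from __future__ import annotations


def pick_language(accept_language: str, supported: list[str], default: str = "en") -> str:
    """Pick the best supported language for an Accept-Language header value."""
    candidates = []
    for part in accept_language.split(","):
        piece = part.strip()
        if not piece:
            continue
        tag, _, params = piece.partition(";")
        weight = 1.0  # a tag without an explicit q-value counts as 1.0
        params = params.strip()
        if params.startswith("q="):
            try:
                weight = float(params[2:])
            except ValueError:
                weight = 0.0
        candidates.append((weight, tag.strip().lower()))
    # Highest weight first; the sort is stable, so header order breaks ties.
    candidates.sort(key=lambda item: item[0], reverse=True)
    supported_lower = {code.lower(): code for code in supported}
    for weight, tag in candidates:
        if weight <= 0:
            continue
        if tag in supported_lower:
            return supported_lower[tag]
        primary = tag.split("-")[0]  # e.g. "es-mx" falls back to "es"
        if primary in supported_lower:
            return supported_lower[primary]
    return default


if __name__ == "__main__":
    print(pick_language("es-MX,es;q=0.9,en;q=0.8", ["en", "es", "zh"]))
```

The chosen code could then be passed to a translation API or used to select a prompt in the matching language.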

Current Challenges

NLWeb’s current state presents a mix of strengths, weaknesses, and obstacles that shape its path forward. Understanding these is crucial to assessing its potential and adoption trajectory.

What Works Well

NLWeb’s open-source flexibility is a major strength, allowing developers to customize its model-agnostic architecture for diverse use cases, from logistics to IT management GitHub. Its integration with schema.org actions enables practical automation, as seen in the examples below, where tasks like shipment approvals and license management are streamlined with data-driven insights.

Early adopters, such as Chicago Public Media, demonstrate success in niche applications, like news archive querying Microsoft News.

The A-U-S-S-I framework ensures data is structured and accessible, aligning with semantic web principles and supporting robust AI interactions. These strengths position NLWeb as a promising tool for tech-savvy teams and enterprises with resources to invest.

What Falls Short

Despite its promise, NLWeb’s results often disappoint due to inconsistent AI outputs and resource-intensive setup. The LLM-driven responses, while capable, can produce inaccurate or incomplete code, especially for complex queries, requiring manual debugging.

The setup process, involving Azure AI Search, Docker, and API configurations, is technically complex and costly, with Azure instances incurring expenses even when idle Azure Pricing. Data preparation, such as creating RSS feeds or JSON-LD annotations, demands significant effort, echoing the semantic web’s historical challenges with RDF and OWL IEEE Spectrum.

These shortcomings make NLWeb less accessible to small businesses or sole website owners, limiting its mainstream appeal.

Opportunities Ahead

NLWeb’s opportunities are vast. In B2B, automation could save millions, as seen in logistics and IT examples, with a 2025 Gartner report forecasting 60% enterprise AI adoption by 2027 Forbes.

In consumer markets, voice search and multilingual support could drive engagement, particularly in mobile and IoT contexts TechCrunch.

The open-source model invites innovation, potentially reviving the semantic web through practical, multilingual, and interoperable solutions. By simplifying deployment and expanding action vocabularies, NLWeb could become a cornerstone of the agentic web, as hinted in its roadmap Microsoft News.

Obstacles to Overcome

Several obstacles hinder NLWeb’s adoption:

- Scalability Issues: In our opinion, NLWeb struggles with high-traffic scenarios, which require advanced cloud optimization and drive up AI costs for the website owner.

- Adoption Barriers: Limited community engagement, with only 1,200 GitHub stars as of May 2025, slows development GitHub. Without a critical mass of contributors, NLWeb risks stagnating, like early semantic web tools MIT Semantic Web.

- Lack of Simplified Deployment: The absence of a managed SaaS model or lightweight plugin alienates non-technical users, who face a steep learning curve, and hinders easy adoption.

- Standardization Gaps: Limited schema.org action vocabularies and inconsistent API support across platforms hinder interoperability, as highlighted in a 2025 W3C report W3C Data Activity. This complicates cross-platform workflows, such as integrating logistics scripts with global ERPs.

These challenges mirror the semantic web’s struggle to balance innovation with usability. While NLWeb’s open-source model fosters experimentation, its complexity and resource demands could deter widespread adoption unless addressed through community contributions or simplified deployment options GitHub Contributions.

Conclusion – what we think about NLWeb

NLWeb, unveiled at Microsoft Build 2025, offers a transformative approach to turning websites into AI-powered knowledge hubs, blending conversational AI with the promise of the semantic web Microsoft News.

This article provided a holistic exploration of NLWeb’s capabilities, delivering a detailed setup guide for configuring it with Azure AI Search and OpenAI, optimizing data using the A-U-S-S-I framework (Accessible, Understandable, Structured, Semantic, Interlinked), and deploying it via Docker on Azure App Service.

We demonstrated seamless webpage integration through a JavaScript chatbot, enabling natural language interactions for diverse users.

Through compelling use cases, we showcased NLWeb’s potential to enhance e-commerce engagement, empower news agencies to create proprietary AI knowledge bases amid AI crawler restrictions, and enable developers to pioneer blockchain AI agents for schema.org actions. Our outlook explored future avenues like internationalization, voice search optimization, cross-platform interoperability, community-driven extensions, advanced AI integration, and custom UI generation.

NLWeb’s promise aligns with emerging trends, particularly the rise of voice search and conversational interfaces. With 50% of searches projected to be voice-based by 2026, NLWeb’s natural language capabilities position it to capitalize on this shift, enabling intuitive user experiences according to TechCrunch. Its agentic potential, driven by schema.org actions, hints at a future where websites act as autonomous hubs, executing tasks like procurement, licensing, or workflow automation via AI. The logistics and license management examples illustrate this, generating code and potential UIs for dynamic, data-driven processes. Internationalization could further amplify NLWeb’s reach, supporting multilingual interfaces and localized workflows, while voice search and interoperability promise seamless integration with global ecosystems.

However, adoption remains a critical hurdle. As Snowflake’s blog notes, NLWeb’s success depends on community-driven innovation, with only 1,200 GitHub stars indicating slow traction as of May 2025 GitHub. Without widespread developer and business uptake, NLWeb risks fading like earlier semantic web efforts, which struggled due to complexity and limited incentives IEEE Spectrum. Technically, NLWeb poses significant challenges, especially for sole website owners. Setting up an Azure instance, containerizing with Docker, and maintaining a server—even when unused—incurs substantial costs and effort Azure Pricing. Unlike a simple SaaS plugin, deploying NLWeb demands expertise in configuring APIs, optimizing data pipelines, and managing cloud infrastructure, creating a steep barrier for non-technical users Microsoft Azure Documentation.

The results of NLWeb, while promising, often fall short of expectations, echoing challenges from the semantic web era. The effort to label, annotate, and interlink data using the A-U-S-S-I framework is meticulous, requiring time and expertise akin to the RDF and OWL complexities that hindered earlier semantic initiatives W3C RDF Primer. Even with AI-assisted tools, preparing RSS feeds, embedding schema.org markup, or defining JSON actions remains resource-intensive, potentially deterring widespread adoption. Scalability issues further complicate its readiness for high-traffic scenarios, and inconsistent AI outputs necessitate manual intervention, undermining reliability.

The potential for semantic actions, however, is immense, particularly in B2B and supply chain scenarios. Actions like LicenseAction or SearchAction could enable efficient B2B marketplaces, reducing friction in enterprise procurement. Imagine a supply chain platform where NLWeb processes “Procure 100 units of X” and executes a blockchain transaction, or a developer generating a Python script with an AI action that automates the licensing of libraries used in the software. Even if NLWeb fails in the consumer space, its semantic actions could revolutionize enterprise workflows, much like niche semantic web applications persisted despite mainstream challenges, according to IEEE Spectrum.

Running your own AI with NLWeb raises profound questions about the future of search. On a large scale, if every website hosts its own AI knowledge base, traditional search engines like Google may face disruption, as users query site-specific AIs. This could democratize search but also fragment it, raising concerns about data silos, interoperability, and AI bias. How will users discover niche AIs? Will standards like the Model Context Protocol (MCP) unify these systems, and what business models will emerge? How will content creators be paid for their content? These questions remain open, underscoring NLWeb’s ambitious vision to reshape digital ecosystems.

Ultimately, the key NLWeb consumer adoption question is whether business models can monetize the effort of NLWeb integration and data labeling. NLWeb will only succeed if businesses, publishers, and developers can leverage their investments. The significant time, expertise, and financial resources required for setup, deployment, and data optimization must yield tangible returns, or NLWeb risks remaining a visionary but underutilized tool.

Despite these challenges, NLWeb’s alignment with voice search, internationalization, and action-driven automation positions it as a potential leader in the agentic web.

We invite you to explore NLWeb’s capabilities at GitHub, contribute to its development, and share your perspective with us on X or Bluesky.

FAQ

What is NLWeb, and how does it work?

NLWeb is Microsoft’s open-source protocol (Build 2025) for creating AI-powered knowledge hubs with natural language interfaces. It processes website data (e.g., RSS, JSONL) using AI models to answer user queries like “Find budget laptops.” Websites become conversational apps, leveraging schema.org actions and the Model Context Protocol (MCP) for agentic interactions.

Why should businesses use NLWeb in 2025?

NLWeb enhances user engagement with AI-driven chatbots, supports voice search (50% of searches by 2026), and ensures data control against AI crawlers. It’s ideal for e-commerce, news, and blockchain, offering scalability and flexibility. Businesses can create niche AI knowledge bases, driving traffic and monetization.

What are the key benefits of NLWeb for websites?

NLWeb improves engagement with natural language queries, scales with model-agnostic design, delivers data-driven responses, and supports diverse use cases (e-commerce, news, blockchain). It aligns with the semantic web, enabling intuitive interfaces and controlled content access, vital as 88% of news outlets block AI crawlers.

How does NLWeb compare to traditional chatbots?

Unlike traditional chatbots, NLWeb offers site-specific AI knowledge bases, leveraging schema.org actions and user data for tailored responses. It’s model-agnostic, supports voice search, and integrates with the Model Context Protocol (MCP) for agentic web interactions, providing greater control and flexibility.

Is NLWeb free to use for website owners?

NLWeb is open-source and free to use, but associated costs arise from Azure hosting, API usage (e.g., OpenAI), and data preparation. Small setups can use Azure’s free tier, while larger deployments require paid plans, impacting scalability.

How do I set up NLWeb on my computer?

Clone the NLWeb repository (git clone https://github.com/iunera/NLWeb), create a virtual environment (python3 -m venv myenv), install dependencies (pip install -r requirements.txt), and configure the .env file. This ensures a clean setup for AI-powered websites.

What is the A-U-S-S-I framework for NLWeb?

The A-U-S-S-I framework (Accessible, Understandable, Structured, Semantic, Interlinked) optimizes data for NLWeb. It ensures machine-readable (RSS), logically organized (tables), and semantically rich (schema.org) content, enhancing AI query accuracy for knowledge hubs.

How do I configure an OpenAI API key for NLWeb?

Create an OpenAI project at platform.openai.com, generate an API key, and add it to code/.env (OPENAI_API_KEY=<your-key>). Edit config_embedding.yaml and config_llm.yaml to set preferred_provider: openai, enabling natural language processing.
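Before starting the server, a quick check that the .env file really contains a key can save a debugging round. The sketch below is a deliberately simple parser, not the loader NLWeb uses: parse_env and missing_keys are hypothetical helpers, only the OPENAI_API_KEY name comes from this article, and values still set to a placeholder such as <your-key> are treated as missing.

```python
from __future__ import annotations


def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(env: dict[str, str], required: list[str]) -> list[str]:
    """Return required keys that are absent, empty, or still a <placeholder>."""
    return [key for key in required if not env.get(key) or env[key].startswith("<")]


if __name__ == "__main__":
    sample = "# local settings\nOPENAI_API_KEY=<your-key>\n"
    print(missing_keys(parse_env(sample), ["OPENAI_API_KEY"]))
```

To check a real file, read code/.env into a string and pass it to parse_env.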

What is Azure AI Search, and why is it used in NLWeb?

Azure AI Search is a vector store for NLWeb, enabling efficient data retrieval for AI queries. Configure it in the Azure portal, add the URL and admin key to .env, and set preferred_endpoint: azure_ai_search in config_retrieval.yaml.

How do I import data into NLWeb’s vector store?

Run python3 -m tools.db_load <rss-url> <dataset-name> to import data (e.g., RSS feeds) into Azure AI Search. Troubleshoot issues like marshmallow errors by installing marshmallow==3.13.0. This prepares data for AI knowledge hub queries.

What is the Model Context Protocol (MCP) in NLWeb?

MCP, developed by Anthropic, connects AI models to data systems. Each NLWeb instance acts as an MCP server, making content discoverable by AI agents, enhancing agentic web interactions.

How does the A-U-S-S-I framework optimize NLWeb data?

A-U-S-S-I ensures data is Accessible (RSS feeds), Understandable (logical structures), Structured (tables), Semantic (schema.org), and Interlinked (internal links), enabling NLWeb to deliver precise AI knowledge hub responses.

What is the role of RSS feeds in NLWeb?

RSS feeds provide accessible, standardized data for NLWeb indexing. Optimize feeds with <description>, <category>, <pubDate>, and <link> tags to enable queries like “Show recent articles”.
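As a sketch of what a well-populated feed entry looks like, the following builds one RSS item with the tags named above using Python’s standard library. The function name and sample values are illustrative; the date is formatted in the RFC 822 style that RSS 2.0 expects.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def build_rss_item(title: str, link: str, description: str,
                   category: str, published: datetime) -> ET.Element:
    """Create an <item> element carrying the fields a feed reader can index."""
    item = ET.Element("item")
    ET.SubElement(item, "title").text = title
    ET.SubElement(item, "link").text = link
    ET.SubElement(item, "description").text = description
    ET.SubElement(item, "category").text = category
    # format_datetime produces an RFC 822 style date for timezone-aware values.
    ET.SubElement(item, "pubDate").text = format_datetime(published)
    return item


if __name__ == "__main__":
    # Placeholder article data for illustration.
    item = build_rss_item(
        "Choosing an open-source license",
        "https://www.example.com/articles/licenses",
        "An overview of MIT & Apache licensing.",
        "Licensing",
        datetime(2025, 5, 20, 12, 0, tzinfo=timezone.utc),
    )
    print(ET.tostring(item, encoding="unicode"))
```

ElementTree escapes characters such as & automatically, which avoids one of the most common causes of invalid feeds.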

What is JSONL, and how does NLWeb use it?

JSONL (JSON Lines) stores structured data (e.g., {id, title, content, metadata}) for NLWeb. Each line is a JSON object, imported with python3 -m tools.db_load, enabling semantic queries like “List MIT-licensed tools.”
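A minimal sketch of the format: each record is serialized as one JSON object per line. The field names follow the example in this article; the exact layout the NLWeb loader expects should be checked against the repository, and the sample record is invented for illustration.

```python
from __future__ import annotations

import json


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines: one compact JSON object per line."""
    return "".join(json.dumps(record, ensure_ascii=False) + "\n" for record in records)


def from_jsonl(text: str) -> list[dict]:
    """Parse JSON Lines back into a list of records, ignoring blank lines."""
    return [json.loads(line) for line in text.split("\n") if line.strip()]


if __name__ == "__main__":
    # Sample record using the article's field names.
    records = [
        {
            "id": "tool-1",
            "title": "Example Tool",
            "content": "A small utility released under the MIT license.",
            "metadata": {"license": "MIT"},
        }
    ]
    print(to_jsonl(records), end="")
```

Because json.dumps escapes newlines inside strings, multi-line content still ends up on a single line per record.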

Why is semantic HTML important for NLWeb?

Semantic HTML (<article>, <section>, <table>) ensures NLWeb’s AI can parse content logically, improving query accuracy. Clean HTML avoids JavaScript-heavy rendering issues, aligning with A-U-S-S-I principles Google Webmaster Guidelines.

How do I validate NLWeb data imports?

Use W3C Feed Validator for RSS, JSONLint for JSONL, and Google’s Rich Results Test for schema.org. Test imports with python3 -m tools.db_load <url> Test-Content and query responses to ensure AI knowledge hub accuracy.
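For JSONL files, a local pre-check can catch problems before an import is attempted. The sketch below reports lines that are not valid JSON objects or that lack expected fields; validate_jsonl is a hypothetical helper, and the required field list is an assumption taken from this article’s example record.

```python
from __future__ import annotations

import json

# Field names assumed from the article's example record.
REQUIRED_FIELDS = ("id", "title", "content", "metadata")


def validate_jsonl(text: str, required: tuple[str, ...] = REQUIRED_FIELDS) -> list[str]:
    """Return human-readable problems; an empty list means every line passed."""
    problems = []
    for number, line in enumerate(text.split("\n"), start=1):
        if not line.strip():
            continue  # blank lines (such as a trailing newline) are ignored
        try:
            record = json.loads(line)
        except json.JSONDecodeError as error:
            problems.append(f"line {number}: invalid JSON ({error.msg})")
            continue
        if not isinstance(record, dict):
            problems.append(f"line {number}: expected a JSON object")
            continue
        missing = [field for field in required if field not in record]
        if missing:
            problems.append(f"line {number}: missing {', '.join(missing)}")
    return problems


if __name__ == "__main__":
    sample = '{"id": "1", "title": "A", "content": "x", "metadata": {}}\n{"id": "2"}\n'
    for problem in validate_jsonl(sample):
        print(problem)
```

Running this before the import keeps malformed records out of the vector store.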

How does interlinking content improve NLWeb performance?

Interlinking with tags, categories, and anchors (e.g., “Explore MIT licenses”) helps NLWeb understand relationships, improving query accuracy for AI knowledge hubs.

Can NLWeb handle unstructured data?

NLWeb prefers structured data (RSS, JSONL, schema.org) but can process unstructured data with preprocessing. Use AI tools to convert text into A-U-S-S-I-compliant formats for better AI query results.

How do I deploy NLWeb on Azure?

Create a Docker image (docker build -t nlweb:latest .), tag and push it to Azure Container Registry (docker push <acr>.azurecr.io/nlweb:latest), and deploy via Azure App Service. Configure .env variables for AI knowledge hub functionality.

How do I embed NLWeb’s chatbot on my website?

Add the NLWeb JavaScript client (<script src="https://nlweb.microsoft.com/js/nlweb-client.min.js"></script>), create a container (<div id="nlweb-container"></div>), and initialize with NLWeb.init({container: 'nlweb-container', serverUrl: '<azure-url>'}) for AI chatbot integration.

Can NLWeb scale for high-traffic websites?

NLWeb’s scalability is limited without cloud optimization. You can run multiple instances of the NLWeb service behind a load balancer. However, high traffic currently also means high AI costs for the website owner.
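To get a feel for how AI costs grow with traffic, a back-of-the-envelope estimate is often enough. The sketch below multiplies query volume by per-query token costs; every number in the example call is a made-up placeholder, not a real price or a measured token count, so substitute your provider’s current rates and your own measurements.

```python
def monthly_llm_cost(queries_per_day: float, input_tokens: float, output_tokens: float,
                     price_in_per_1k: float, price_out_per_1k: float,
                     days: int = 30) -> float:
    """Estimate monthly LLM spend from traffic and per-1,000-token prices."""
    # Cost of one query: input and output tokens are usually priced separately.
    per_query = (input_tokens / 1000) * price_in_per_1k \
        + (output_tokens / 1000) * price_out_per_1k
    return queries_per_day * days * per_query


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    estimate = monthly_llm_cost(
        queries_per_day=1000,
        input_tokens=2000,
        output_tokens=500,
        price_in_per_1k=0.001,
        price_out_per_1k=0.002,
    )
    print(f"Estimated monthly cost: {estimate:.2f}")
```

Because the cost scales linearly with queries per day, doubling traffic doubles the bill unless responses are cached or prompts are shortened.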

How does NLWeb help news agencies combat AI crawlers?

NLWeb enables news agencies to host proprietary AI knowledge bases, blocking crawlers (88% of outlets do, per Wired) while offering natural language queries like “Summarize 2024 news.” This retains traffic and monetizes content.

How will NLWeb support voice search in 2025?

NLWeb’s natural language processing aligns with the 50% voice search trend by 2026. Future optimizations with SpeakableSpecification could enable queries like “Check shipment status,” boosting voice search AI.

How does NLWeb align with the agentic web?

NLWeb’s schema.org actions and MCP server functionality enable agentic web interactions, where websites act as autonomous hubs for tasks like licensing or procurement, redefining digital ecosystems.

What are the main challenges of using NLWeb?

NLWeb faces technical complexity, high Azure costs, inconsistent AI outputs, and data annotation efforts. Scalability and limited community adoption (1,200 GitHub stars) are hurdles.

Why is NLWeb’s setup complex for small businesses?

NLWeb requires Azure expertise, Docker, and API configurations, with ongoing costs. Data preparation (RSS, JSONL) is time-intensive, making it less accessible for non-technical users.